Navigating The Storm: Understanding the Potential Threat Landscape of Cloud GenAI Services

The blog post is written in collaboration with European Champions Alliance, a non-profit initiative on a mission to solidify Europe’s position in the global technological landscape.

The rapid adoption of generative AI (GenAI) in the cloud transforms industries, unlocking unprecedented potential for creativity, automation, and innovation. GenAI services are becoming indispensable tools for businesses of all sizes, from crafting compelling marketing copy to generating complex code.

However, this powerful technology also introduces a new and evolving threat landscape that organizations must understand and address proactively. As many increasingly rely on cloud-based GenAI services, it's crucial to acknowledge and mitigate the potential risks that accompany this transformative journey. Ignoring these threats could lead to significant security breaches, data loss, reputational damage, and financial repercussions.

The Threat Landscape of Cloud GenAI Services Is Evolving Rapidly

As GenAI technology will become a mainstream technology in the next few years due to the increasing demands and requirements for implementing GenAI services, the threat landscape associated with GenAI will also rapidly evolve. This will lead to an increasing number of threats and the variety of threats targeting the GenAI services

There are two main reasons why the GenAI threat landscape is something to be cautious about in the next few years:

- AI Is The New IP: Similar to confidential data and services hosted in the cloud, the AI model, assets, and workload are the new intellectual property that needs to be protected from potential cyber threats. If they are somehow leaked to the public or used by unauthorized users, this could cause the organizations to lose the novelty of their AI system, especially in the rapid AI development race.

- Lack of Security Awareness: Many organizations are currently in the initial adoption stage of GenAI technology, exploring the potential and challenges of GenAI within the organization. However, they do not realize that they are responsible for ensuring the AI system is correctly and securely configured, similar to the cloud computing’s shared responsibility model. 47% of organizations do not have a specific cybersecurity strategy to protect their AI system, while 62% of companies do not have a privacy policy specifically for AI.

Examples of Cloud GenAI Threat Landscape

The unique characteristics of GenAI models and their deployment in the cloud create novel attack vectors. Here are some key potential threats:

Prompt Injection & Jailbreaking

Prompt Injection occurs when users’ prompt inputs are deliberately or inadvertently incorrectly passed to other parts of the GenAI model. Jailbreaking, on the other hand, is a deliberate attempt to force the GenAI model to disregard its security protocol through prompt inputs. The terms "Prompt Injection" and "Jailbreaking" are often used interchangeably.

Both attacks use malicious prompts, which the GenAI model processes. These contents do not have to be human-readable, for example, when attached to a file. This could lead to the GenAI model producing unintended outputs that deviate from its normal behavior, such as the disclosure of sensitive information, incorrect or biased results, and influencing critical decision-making processes. For example, a chatbot is “tricked” into selling a brand-new car for $ 1 using prompt injection attacks.

Model/Workload Hijacking

Model or Workload Hijacking occurs when attackers take over a GenAI model or workload without authorization and use it without the owner’s permission. This could be done by getting the credentials to gain unauthorized access to the GenAI model or the workload. One of the most famous examples of this attack is LLMjacking, where attackers hijack a large language model (LLM).

The hijacking attack could have several consequences, such as monetary loss, as it would increase the model's usage beyond its usual level, and the cloud service providers' GenAI services charge fees based on usage. Another consequence would be access to confidential information and GenAI resources that could be modified, deleted, or leaked to the public unauthorizedly.

Data Poisoning

Data Poisoning aims to corrupt the output or performance of the GenAI model by introducing malicious data points during the pre-training or fine-tuning stage, for example, through an unverified external dataset or malicious content. Tampering with the dataset used by the model can lead to several results, such as less accurate output, reduced performance of the model, and compromised model security.

Model/Asset Theft

The theft of the GenAI model or the assets used for or within the GenAI workloads, such as the datasets, programming code, or the database, can be done as the attackers gain unauthorized access to the cloud infrastructure hosting the model or the assets. The attackers could, in theory, recreate the GenAI model or workload based on the stolen model or assets, which could result in the affected organizations losing the “edge” of their GenAI models or workloads.

How to Secure Cloud GenAI Workloads

Securing cloud GenAI workloads requires a multi-layered approach that addresses the unique risks associated with this technology. Here are some crucial steps organizations can take:

- Implement strong authentication mechanisms, including multi-factor authentication (MFA), and enforce strict role-based access control (RBAC) to limit who can interact with GenAI models, data, and related infrastructure.

- Validate and sanitize prompt inputs to prevent prompt injection attacks, such as filtering out potentially malicious or unexpected inputs before they are processed by the GenAI model.

- Continuously monitor the outputs generated by GenAI models for unexpected, harmful, or sensitive content to ensure the model aligns with expected behavior and security policies.

- Implement security measures to protect the integrity of the GenAI models themselves. This includes monitoring for unauthorized modifications, implementing version control, and potentially using techniques like watermarking to detect tampering or unauthorized copying.

- Secure the APIs used to interact with cloud-based GenAI services facing the users through authentication and authorization, rate limiting to prevent abuse, and monitoring API traffic for suspicious activity.

- Create and implement the necessary security strategy and incident response playbooks to be implemented for the GenAI workloads.

- Conduct regular security audits and penetration testing targeting GenAI workloads and their underlying infrastructure to measure the security and cyber resilience posture of the workloads and identify and remediate potential security vulnerabilities.

How Mitigant Can Help to Secure Cloud GenAI Workloads

Mitigant Security for GenAI platform offers a proactive cloud security approach designed to help organizations secure their cloud-native infrastructures and cloud GenAI workloads effectively. Our comprehensive approach addresses the various threats outlined above:

- AI Security Posture Management: Mitigant can detect and remediate security vulnerabilities in the cloud GenAI workloads due to misconfigurations and compliance violations. Organizations can also monitor the cloud GenAI workloads by detecting changes happening within workloads for potential malicious activities and their compliance with cloud security regulations and best practices, such as ISO 27001, CIS Benchmark, and SOC2.

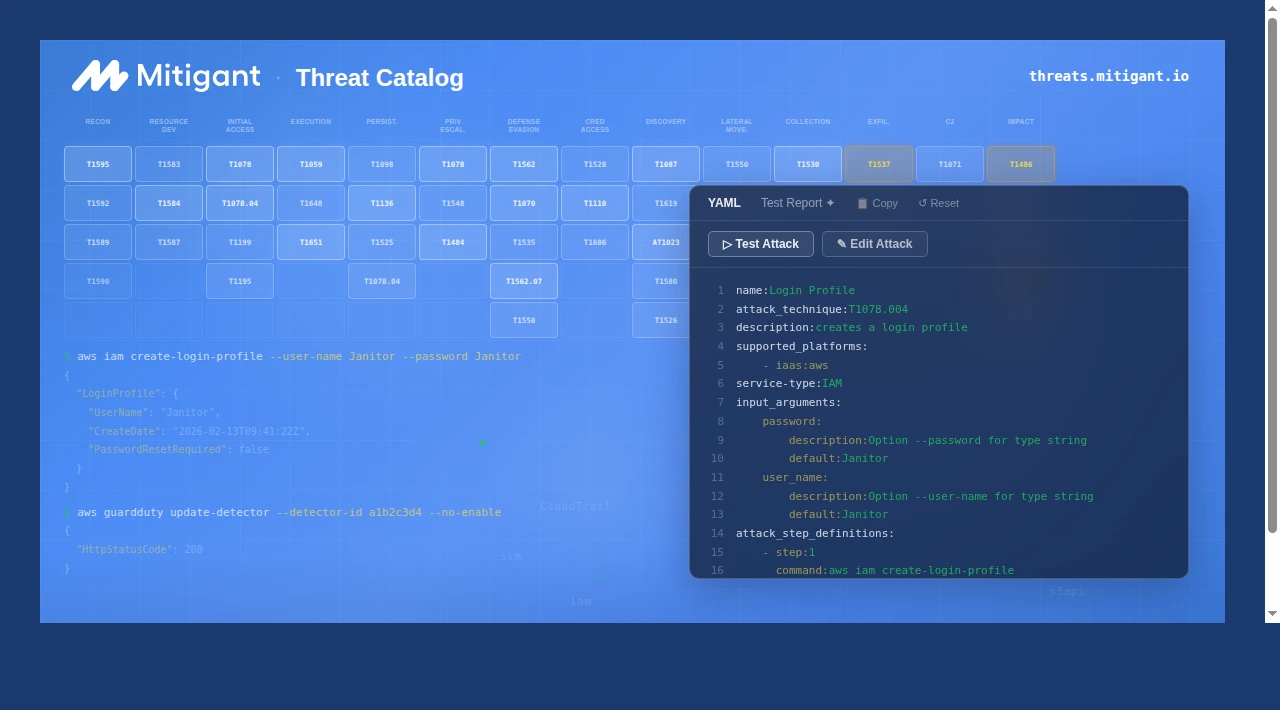

- GenAI Red Teaming: Mitigant can emulate various threats targeting the cloud GenAI workloads to verify the readiness and the resilience of the workloads and cloud infrastructures against potential threats, such as LLMJacking, ransomware attacks, and data breaches. The emulated threats are based on the MITRE ATT&CK and MITRE ATLAS frameworks that will help organizations discover and remediate security blind spots within the cloud GenAI security strategy and incident playbooks.

Sign up at mitigant.io/sign-up for a free trial to proactively protect your cloud and AI from cyber threats or book a meeting with our cloud security experts to discuss your cloud security requirements and how Mitigant can accelerate your cloud security journey at mitigant.io/book-demo.

.png)