Bedrock or Bedsand: Attacking Amazon Bedrock’s Achilles Heel

Introduction

Organizations increasingly leverage Amazon Bedrock to power their Generative AI (GenAI) applications. Amazon Bedrock provides access to several Foundation Models (FMs) supplied by leading AI companies, including A121 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon. Bedrock employs several AI techniques, e.g., fine-tuning and RAG, to empower organizations to build innovative GenAI applications without undergoing rigorous AI processes. Furthermore, Bedrock is serverless, relieving users of infrastructure orchestration and maintenance. However, a firm understanding of the shared responsibility model and its peculiar application to Bedrock is imperative for maintaining a healthy cloud security posture. The risks Bedrock introduces using AWS S3 in the Knowledge Base for the Bedrock component are central to this understanding. This critical Bedrock component manages data retrieval and processing amongst the core Amazon Bedrock components. The vital role played by S3 as a data source is Bedrock's Achilles heel; it introduces several attack vectors, including data poisoning, denial of service, data breach, and S3 ransomware. This blog post delves into these attack vectors, foreseeable impact, detection opportunities, and mitigations.

Amazon Bedrock - The Generative Artificial Intelligence Genie

Amazon Bedrock was launched with a single objective - to be the genie of GenAI! True to this ambition, Bedrock provides easy access to several Foundation Models (FMs) produced by leading AI companies, including Amazon. With a few button clicks, you can access FMs from A121 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, etc. Armed with these FMs, organizations can build innovative GenAI applications, provide innovative services and gain competitive advantages. Amazon Bedrock is a serverless service; organizations do not need to manage the infrastructure required to bring their GenAI dreams to fruition.

Furthermore, Bedrock facilitates fine-tuning of FMs and provides seamless access to examine Retrieval Augmented Generation (RAG) techniques. Ultimately, Bedrock abstracts the complexities of GenAI, allowing developers with little or no GenAI skills to build applications quickly. Let's briefly examine RAG, a powerful technique leveraged by Amazon Bedrock.

Bedrock’s Magic - Retrieval Augmented Generation

Retrieval Augmented Generation (RAG) is an advanced technique in Natural Language Processing (NLP) that combines the capabilities of retrieval-based and generation-based models. Instead of relying solely on pre-trained models, RAG enhances responses by retrieving relevant information from external data sources, such as databases, documents, or web pages, and integrating it into the generated text. This approach significantly improves the accuracy, relevance, and informativeness of the responses provided by Large Language Models (LLMs).

Amazon Bedrock integrates RAG to deliver superior GenAI capabilities, thereby extending the powerful capabilities of LLMs to specific domains such as organizational internal knowledge bases, specialized chatbots, and digital assistants. Bedrock’s implementation of RAG involves the following steps:

- Query Processing: When a query is received, Bedrock processes it using pre-trained LLMs.

- Data Retrieval: Based on the query context, the system retrieves relevant information from various data sources, such as S3 buckets, Amazon Kendra, and Amazon OpenSearch.

- Response Generation: The retrieved data is then used to augment the response generated by the LLM, ensuring it is both contextually accurate and informative.

- Final Output: The final output is a synthesized response that combines the LLM's generative capabilities with the retrieved information, providing users with high-quality answers.

Bedrock’s RAG Architecture - A Quick Primer

Amazon Bedrock's architecture relies heavily on S3 for storing and retrieving documents used by LLMs, which are then accessed and processed by the Bedrock Knowledge Base and Bedrock Agents.

Bedrock Agents: Bedrock Agents interact with the Knowledge Base and retrieve the necessary information from S3. When a query is received, an agent identifies the relevant data sources within the Knowledge Base, accesses the corresponding documents in S3, and provides the data to the LLM for response generation. Thus, Agents serve as intermediaries bridging the gap between raw data storage and intelligent query handling.

Bedrock Knowledge Base: Knowledge Bases for Amazon Bedrock is an Amazon Bedrock component that manages and organizes the data required to equip Foundation Models with current, proprietary information. It indexes and categorizes the information, making it easily retrievable by the LLMs during query processing. This structured organization is crucial for efficient data retrieval and response generation.

Data sources: The Knowledge Bases for Amazon Bedrock require data sources to store documents later used for the embedding process. AWS S3 is used as a data source, given its ability to store documents in different formats, including datasets, images, and videos. These documents can include internal company data, public datasets, and other informational resources necessary for the LLMs to provide accurate and contextually relevant answers.

Vector Databases: Bedrock integrates with vector databases like Amazon OpenSearch and Amazon Kendra to enhance data retrieval and processing. These databases index the retrieved data from S3, allowing for efficient and quick access. This indexing process ensures that the information is organized in a way that optimizes search and retrieval operations, making it easier for Bedrock to access and process the data needed to generate accurate and relevant responses.

Bedrock’s Attack Vectors: Exploiting S3 Data Sources

Amazon Bedrock uses S3 as a data source for storing documents required by RAG to provide contextual, up-to-date, and accurate responses. S3 allows easy upload of documents needed for RAG; however, this convenience is a double-edged sword. According to the shared responsibility model, organizations are responsible for managing S3’s security, and this responsibility has proven to be challenging over the years. Conversely, all existing S3 risks apply to Bedrock, with even more severe implications. This introduces two categories of risks: S3-specific and Bedrock-specific risks. S3-specific risks are well known; therefore, we would focus on the Bedrock-specific risks. Given that these risks are already documented in the MITRE ATLAS, the relevant attack techniques will be discussed through its scopes.

Poisoning Training Data

Threat Scenario (MITRE ATLAS AML.T0020): Adversaries might conduct data poisoning attacks after accessing S3 buckets containing Bedrock’s RAG documents. There are different kinds of data poisoning attacks: pre-training data, fine-tuning data, and embedding data. The relevant attack here is the latter: embedding data since the documents in the S3 are used for converting categorical data into numerical representation, which are then used to provide contextual responses to Bedrock agents. Data poisoning attacks inject malicious or nonsensical data into the training dataset, corrupting the LLM and compromising the quality of output.

Impact: Bedrock agents may provide inaccurate or harmful responses, affecting business operations and user trust. The OWASP LLM03 (Training Data Poisoning) provides a vivid example:

A malicious actor or competitor intentionally creates inaccurate or malicious documents that target a model’s training data, training the model simultaneously based on inputs. The victim model trains using this falsified information, reflected in the outputs of generative AI prompts to its consumers.

Data from Information Repositories

Threat Scenario (MITRE ATLAS AML.T0036): Adversaries may take advantage of the information they gather from unauthorized access to the S3 buckets. Given that these buckets might store sensitive information such as intellectual property, internal company data, or other delicate material, access might be advantageous to adversaries. This attack aligns with OWASP top 10 LLMs - LLM06.

Impact: Exposure to critical data could lead to intellectual property theft, competitive disadvantage, or severe reputation damage.

Denial of ML Service

Threat Scenario (MITRE ATLAS AML.T0029): Adversaries can disrupt a GenAI service by launching attacks such as ransomware or resource exhaustion, making the service unavailable. The potential for S3 ransomware is well-known, with a documented Incident response event by Invictus, however the motivation to conduct S3 ransomware attacks will be increased due to the involvement of GenAI workloads. Attackers can also delete these documents and deny legitimate access (bucket takeover). These actions would result in a denial of service, also listed in the OWASP Top 10 LLMs - (LLM04).

Impact: Gaining access to an S3 bucket with embedding data could lead to service downtime and subsequent business disruption, loss of customer trust, financial losses, legal issues, etc.

Attack Detection and Mitigation

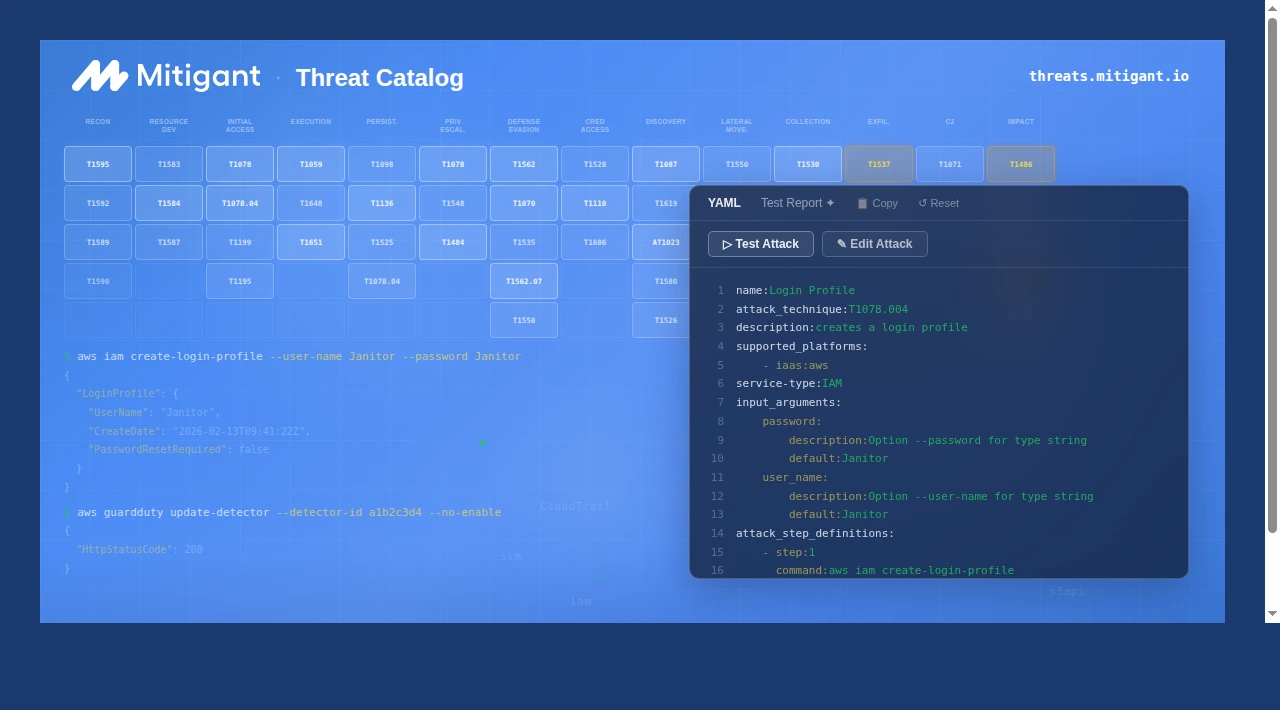

Mitigant Cloud Attack Emulation was leveraged to run several attacks against Amazon Bedrock, it was quite interesting to see that most threat detection systems (XDRs, CDRs) could not detect these attacks. This scenario is what we call “Zero-Day detections,” although it is analogous to Zero-day vulnerabilities, it defines a state where threat detection systems cannot detect attacks due to the non-implementation of commensurate detection logic. The security team must build custom mechanisms to address this detection gap. Additionally, the approaches discussed in the following sections would aid in mitigating Bedrock's highlighted security issues.

Regular Audits and Monitoring: Conduct frequent audits of S3 bucket configurations and access policies to ensure no misconfigured components. Security tools like CSPMs and ITDRs can be helpful.

Threat Detection: Continuous collection and analysis of CloudTrail events would be helpful for threat detection and incident response. Several important CloudTrail events, including ListDataSources, ListAgents, GetDatasource, GetKnowledgeBase, ListFoundationModels, and GetAgent, could be collected and analyzed for prompt alerts when specific events are identified.

Data Encryption: Encrypting data at rest and in transit will prevent unauthorized access and ensure data integrity. The documents needed for Bedrock’s RAG can be encrypted data at rest and in transit; however, this must be integrated into the data retrieval and analysis process. Consequently, efficient key management is required e.g., using secret storage systems like AWS Secret Manager.

Access Controls: Strict access control is critical to Bedrock's security posture. Implementing the principle of least privilege ensures that users and applications have only the permissions they need to perform their functions. Strict access controls are also essential to prevent unauthorized access to the configured S3 buckets.

Adversary Emulation & Red Teaming: Compromised Bedrock components could severely impact an organization's GenAI infrastructure, leading to cost implications, legal drama, and reputational damage. Given the lack of proactive tools, organizations must leverage adversary emulation approaches to validate GenAI's security posture.

GenAI Workload Security with Mitigant Cloud Security Platform

The Mitigant Cloud Security Platform is at the forefront of technologies aimed at protecting GenAI cloud workloads from malicious attackers. Mitigant Cloud Attack Emulation has many attacks that can be used by security teams to quickly run adversary emulation exercises, red/purple teaming engagements, and incident response exercises. These features are timely, and very few security vendors have this capability. Furthermore, the Mitigant CSPM checks GenAI workloads for misconfigured resources and changes that might indicate malicious actions. These changes are detected using an innovative cloud drift management mechanism for quick attack detection.

Sign up for a free trial today and secure you GenAI workloads in the cloud - https://mitigant.io/sign-up

Conclusion

Amazon Bedrock provides enterprises easy access to build and deploy GenAI applications that leverage various LLMs. Enterprises can seamlessly customize these LLMs to suit specific use cases through RAG. Bedrock's innovative RAG architecture has drastically lowered the entry barriers, thus enabling great innovation. However, at the center of these capabilities lies AWS S3, a service known to be ubiquitous and challenging to secure, mainly due to mistakes and misconfigurations. This article demonstrates the dangers of using S3 in Bedrock and how attackers might leverage this gap to compromise GenAI applications. We also provide some recommended countermeasures for detecting and remediating these attack vectors.

.png)