Demystifying Amazon Bedrock LLMJacking Attacks

Introduction

Organizations are adopting GenAI to enhance productivity, innovation, and business advantage. In response, cloud service providers now offer GenAI-as-a-Service, e.g., Amazon Bedrock, Azure AI Services, and Google Vertex AI. These offerings further accelerate GenAI adoption because they drastically lower the entry barrier for organizations. However, GenAI cloud workloads introduce several security and safety challenges that adopters need to recognize and address. Appropriate countermeasures enable organizations to build Responsible AI systems, especially in light of the increasing call for AI security and safety, e.g., the EU AI Act. Unfortunately, a recent IBM and Oxford Economics report revealed that 70% of executives prioritize innovation over security when considering GenAI strategies. It is, therefore, essential to raise awareness of practical attacks against GenAI systems and their potential impact.

GenAI Cloud Workloads: The New Juicy Targets

It is not news that attackers follow the money trail, and GenAI cloud workloads are increasingly becoming a money trail worthy of attention. Researchers at Wiz gave a glimpse into the current GenAI threat landscape earlier this year when they discovered several attack vectors in SAP AI Core, the premier AI service offered by SAP. The researchers demonstrated how attackers could combine conventional attack vectors, e.g., IAM, with AI-specific attack vectors, e.g., model poisoning, to conduct sophisticated attacks. This demonstrated how complex cloud technologies comprising GenAI workloads raise the bar for cybersecurity.

LLMJacking - A Primer

One of the most prolific attacks against GenAI workloads is LLMJacking. During an LLMJacking attack, cybercriminals illegally gain access to a Large Language Model (LLM) and use it at will. The primary motivation is to avoid the enormous bills accrued when using foundation models offered on cloud platforms (up to $46,000 of LLM consumption per day). Most LLMJacking attacks target Amazon Bedrock, which offers several foundation models from leading AI companies, such as Anthropic, Cohere, Meta, and Stability AI.

A recent report from Permiso Security pointed out that Bedrock has been one of the top targeted cloud services in the last six months, with LLMJacking as the primary attack orchestrated against it. If you are new to Bedrock, including its composition and architecture, and want to learn how to view these from a security standpoint, read our previous article, which described several attack vectors, including data poisoning. Researchers at Sysdig earlier reported LLMJacking attacks and recently published an extended report with more technical details. Furthermore, KrebsOnSecurity recently provided additional insights about LLMJacking attacks based on earlier reports. These publications reveal how lucrative LLMJacking is for cybercriminals. A prominent cybercriminal organization behind this attack has generated $1 million in annualized revenue. Its business model is the sale of sex chatbots.

LLMJacking can be categorized as a Denial of Wallet (DoW) attack: the attackers' massive prompt usage drives up the victim's bill, eventually degrading the overall quality of service or even causing a Denial-of-Service (imagine legitimate usage being stopped due to non-payment). Note that the traditional countermeasures for DoW at the application level are ineffective against LLMJacking attacks in the cloud.

LLMJacking - Attack Steps

Let's quickly look at the typical attack steps of an LLMJacking attack based on the actual attacks that have been reported and the possibilities Bedrock exposes.

- Steal AWS Key: The attacker gains unauthorized access to an AWS key in different ways, including buying compromised keys from initial access brokers, using malware, and stealing keys from code repositories. Attackers can also use other kinds of credentials, e.g., IAM roles, and not only AWS keys.

- Privilege Analysis: Attackers validate the key's permissions and confirm access to Bedrock using tools like AWS CLI or IAM Policy Simulator.

- Bedrock Reconnaissance: If Bedrock is accessible, attackers enumerate the service to discover the available models, agents, and knowledge bases. This allows them to determine Bedrock's capabilities in that specific region. Note that Bedrock is regional; attackers could just iterate across regions.

- Defense Evasion: Crafty attackers attempt to remain stealthy, e.g., by checking whether Model Invocation Logging is enabled. There are also reports that attackers delete model invocation logs afterwards to clean up their trail.

- Model Subscription: Attackers look for models that fit their use case or skillset. If the preferred model is not already subscribed/enabled, they could proceed to enable access to it.

- LLMJacking Proper: Attackers could optionally proceed to hijack the available LLMs by directly connecting to them via API and linking to their malicious apps (e.g., role-playing chatbots). It is also possible that the attacker performs AgentJacking if they do not have enough access to the models but can access one or more agents.
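
Viewed from the defender's side, the steps above leave a trail of API calls that can be grouped by attack stage. The following is a minimal sketch, assuming CloudTrail records have already been exported and parsed into Python dictionaries with the standard `eventName` and `userIdentity.arn` fields. The stage labels, the helper names `stages_by_principal` and `suspicious_principals`, and the two-stage threshold are illustrative assumptions, as is the inclusion of `ListKnowledgeBases`, `InvokeModel`, and `InvokeModelWithResponseStream` in the mapping.

```python
from collections import defaultdict

# Illustrative mapping from CloudTrail event names to the attack steps above.
# The stage labels are assumptions made for this sketch.
STAGE_BY_EVENT = {
    "ListFoundationModels": "reconnaissance",
    "ListAgents": "reconnaissance",
    "ListKnowledgeBases": "reconnaissance",
    "GetModelInvocationLoggingConfiguration": "defense_evasion",
    "DeleteModelInvocationLoggingConfiguration": "defense_evasion",
    "InvokeModel": "invocation",
    "InvokeModelWithResponseStream": "invocation",
}


def stages_by_principal(events):
    """Return {principal ARN: set of attack stages seen for that principal}."""
    seen = defaultdict(set)
    for event in events:
        stage = STAGE_BY_EVENT.get(event.get("eventName"))
        if stage is None:
            continue  # not a Bedrock call this sketch cares about
        principal = event.get("userIdentity", {}).get("arn", "unknown")
        seen[principal].add(stage)
    return dict(seen)


def suspicious_principals(events, min_stages=2):
    """List principals whose calls span at least `min_stages` distinct stages."""
    return sorted(
        principal
        for principal, stages in stages_by_principal(events).items()
        if len(stages) >= min_stages
    )


if __name__ == "__main__":
    # Hypothetical records; the account ID and names are placeholders.
    sample = [
        {"eventName": "ListFoundationModels",
         "userIdentity": {"arn": "arn:aws:iam::111122223333:user/leaked-key"}},
        {"eventName": "GetModelInvocationLoggingConfiguration",
         "userIdentity": {"arn": "arn:aws:iam::111122223333:user/leaked-key"}},
        {"eventName": "InvokeModel",
         "userIdentity": {"arn": "arn:aws:iam::111122223333:role/chat-app"}},
    ]
    print(suspicious_principals(sample))
```

An identity that only invokes models, as a normal application would, stays below the threshold, while one that enumerates models and then inspects the logging configuration is flagged. The threshold would need tuning against an organization's own baseline.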

Countermeasures

Several approaches could be implemented as countermeasures to prevent or detect LLMJacking attacks. Here are some countermeasures:

- Model Security: Enable only the models you want to use and implement restricted access controls, e.g., using SCPs or IAM policies/roles. It is also important to implement controls around the access needed to subscribe to models or limit access to enabled models to specific identities.

- Logging & Analytics: If logging is enabled, the attacker's API calls give defenders detection opportunities. Several CloudTrail events are interesting, e.g., ListFoundationModels, ListAgents, GetModel, DeleteModelInvocationLoggingConfiguration, GetModelInvocationLoggingConfiguration, etc. Passively collecting logs isn't very helpful except for forensic analysis, so a more practical approach is active collection and analysis. Enabling model invocation logging is also essential, as it provides visibility into API calls made against the foundation models.

- Billing Monitoring: Once the actual LLMJacking attack is initiated, billing monitoring could be an effective means of detection. However, this might not be very effective if the victim organization heavily uses LLMs, as the bills might be perceived as normal.

- Least Privilege: Tightening access controls to Bedrock is a critical defensive measure and a known best practice. IAM policies and roles can be defined on a Least-Privilege basis to limit the blast radius of a compromise.

- Configuration Change Monitoring: Monitor for configuration and resource changes, e.g., new models, prompts, and applications deployed on Bedrock. While this might not be directly relevant to the currently reported attacks, it could identify future variants of the attacks as they evolve.

- Bedrock Guardrails: The built-in harmful content filters provided by Bedrock Guardrails could block specific attacker prompts that violate the configured policies and allow defenders to notice these unusual prompts. Bedrock Guardrails can detect and block user inputs and FM responses that are considered harmful, inappropriate, or otherwise undesirable.
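
To make the Model Security and Least Privilege points more concrete, here is a minimal sketch of an IAM policy document, generated in Python, that denies invocation of any model outside an approved list and prevents all but trusted identities from changing or deleting the model invocation logging configuration. The function name `build_bedrock_guard_policy`, the statement IDs, the account ID, the role name, and the example model ARN are placeholders chosen for illustration. Whether the same document can be used unchanged as an SCP depends on the policy elements SCPs accept, so treat it as a starting point rather than a drop-in control.

```python
import json


def build_bedrock_guard_policy(allowed_model_arns, trusted_principal_arns):
    """Build an IAM policy dict with two explicit Deny statements.

    allowed_model_arns: foundation-model ARNs that may be invoked.
    trusted_principal_arns: identities allowed to manage invocation logging.
    """
    if not allowed_model_arns or not trusted_principal_arns:
        raise ValueError("both allow-lists must contain at least one ARN")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Deny invocation of every resource NOT on the approved list.
                "Sid": "DenyInvokeOnUnapprovedModels",
                "Effect": "Deny",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                "NotResource": sorted(allowed_model_arns),
            },
            {
                # Keep invocation logging from being altered or removed
                # by anyone except the trusted identities.
                "Sid": "ProtectInvocationLogging",
                "Effect": "Deny",
                "Action": [
                    "bedrock:DeleteModelInvocationLoggingConfiguration",
                    "bedrock:PutModelInvocationLoggingConfiguration",
                ],
                "Resource": "*",
                "Condition": {
                    "ArnNotLike": {
                        "aws:PrincipalArn": sorted(trusted_principal_arns)
                    }
                },
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder ARNs for illustration only.
    policy = build_bedrock_guard_policy(
        ["arn:aws:bedrock:us-east-1::foundation-model/"
         "anthropic.claude-3-haiku-20240307-v1:0"],
        ["arn:aws:iam::111122223333:role/security-admin"],
    )
    print(json.dumps(policy, indent=2))
```

Explicit Deny statements are used because they take precedence over any Allow an attacker's stolen identity might already carry. Note that the first statement also denies invocation through any resource type not listed, so anything legitimately used for invocation would need to be added to the approved list.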

Safe and Secure GenAI with Mitigant

GenAI is undoubtedly innovative, and while most organizations aspire to leverage its potential, the accompanying security and safety requirements are a responsibility that should not be ignored. This is why the Mitigant Security Platform empowers organizations to use GenAI safely and securely in the following ways:

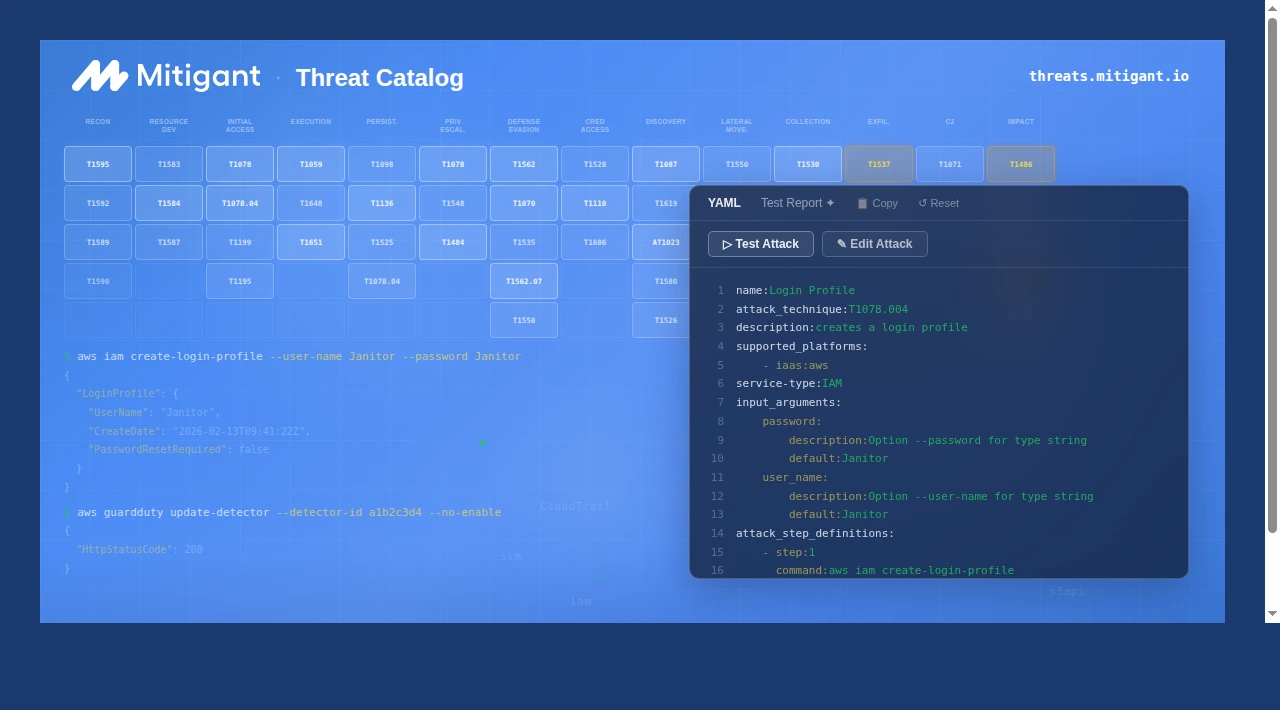

AI Red Teaming

AI red teaming is a foundational aspect of GenAI security. Organizations adopting GenAI must have the means to quickly and continuously run red teaming exercises against LLMs and other components of GenAI infrastructure to ensure responses are delivered as expected. Mitigant Attack Emulation for GenAI integrates into the fabric of AWS GenAI services, allowing automation of AI red teaming. It empowers organizations to quickly test all Bedrock capabilities using over 20 attacks mapped to MITRE ATLAS. See a quick demo of how to run a data extraction attack against Bedrock with Mitigant: Amazon Bedrock Data Exfiltration Attack. These attacks also allow organizations to validate the detection and response capabilities of their security tools, e.g., Cloud Detection and Response.

AI Security Posture Management

Several security posture management approaches, including CSPM and DSPM, are used to manage diverse aspects of the cloud. These approaches are not suited to address AI-specific gaps; hence, there is a need for a dedicated approach: AI Security Posture Management (AISPM). With AISPM, organizations can quickly check for misconfigured GenAI cloud workloads and ensure that security best practices are implemented. Furthermore, organizations can leverage AISPM to monitor and ensure adherence to regulatory and compliance requirements, such as the EU AI Act. Leverage the Mitigant AISPM to quickly and continuously monitor and identify GenAI misconfigurations.

Sign up for your one-month free trial today and stay ahead of cloud attacks aimed at GenAI workloads, such as LLMJacking.
